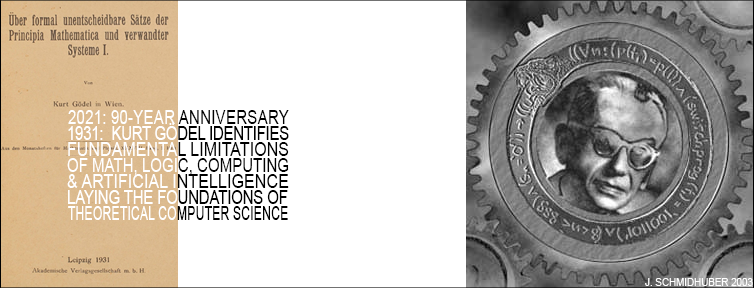

Abstract. In 2021, we are celebrating the 90th anniversary of Kurt Gödel's groundbreaking 1931 paper which laid the foundations of theoretical computer science and the theory of artificial intelligence (AI). Gödel sent shock waves through the academic community when he identified the fundamental limits of theorem proving, computing, AI, logics, and mathematics itself. This had enormous impact on science and philosophy of the 20th century. Ten years to go until the Gödel centennial in 2031!

In the early 1930s, Kurt Gödel articulated the mathematical foundation and limits of computing, computational theorem proving, and logic in general.[GOD][GOD34][GOD21,21a] Thus he became the father of modern theoretical computer science and AI theory.

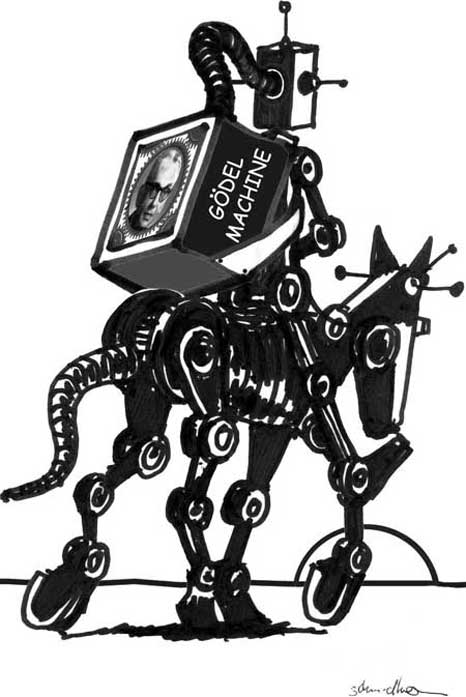

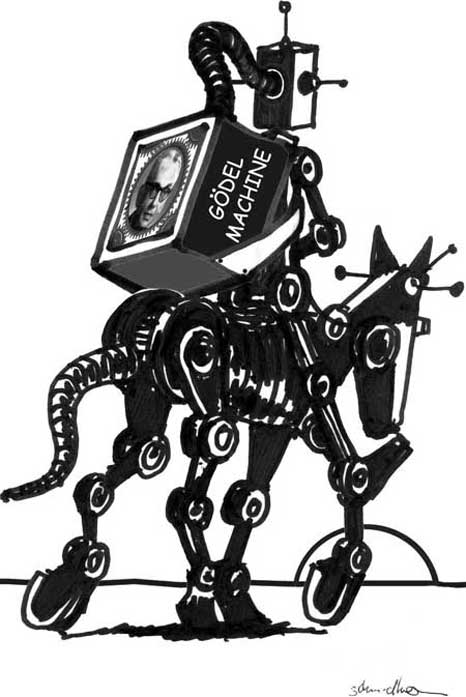

Gödel introduced a universal language to encode arbitrary formalizable processes.[GOD][GOD34] It was based on the integers, and allows for formalizing the operations of any digital computer in axiomatic form (this also inspired my much later self-referential Gödel Machine[GM6]). Gödel used his so-called Gödel Numbering to represent both data (such as axioms and theorems) and programs[VAR13] (such as proof-generating sequences of operations on the data).
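The idea of coding sequences of symbols as single integers can be sketched in a few lines. The following is a minimal illustration, not Gödel's exact 1931 scheme: it assumes that each symbol has already been assigned a positive integer code (the codes used here are hypothetical) and packs a sequence into one number by using the codes as exponents of successive primes. The function names `primes`, `godel_encode`, and `godel_decode` are made up for this example.

```python
from typing import Iterator, List


def primes() -> Iterator[int]:
    """Yield 2, 3, 5, 7, ... by trial division (fine for tiny examples)."""
    found: List[int] = []
    candidate = 2
    while True:
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate
        candidate += 1


def godel_encode(symbols: List[int]) -> int:
    """Pack positive symbol codes s1, s2, ... into 2**s1 * 3**s2 * 5**s3 * ..."""
    if any(s < 1 for s in symbols):
        raise ValueError("symbol codes must be positive integers")
    number = 1
    for p, s in zip(primes(), symbols):
        number *= p ** s
    return number


def godel_decode(number: int) -> List[int]:
    """Recover the symbol codes by reading off the prime exponents in order."""
    if number < 1:
        raise ValueError("codes are positive integers")
    symbols: List[int] = []
    for p in primes():
        if number == 1:
            break
        exponent = 0
        while number % p == 0:
            number //= p
            exponent += 1
        if exponent == 0:
            raise ValueError("not a valid sequence code")
        symbols.append(exponent)
    return symbols


code = godel_encode([3, 1, 2])   # 2**3 * 3**1 * 5**2
print(code)                      # 600
print(godel_decode(code))        # [3, 1, 2]
```

Because prime factorizations are unique, decoding always recovers the original sequence. The same trick applies one level up: a whole proof, being a sequence of formulas, can be coded as a sequence of such numbers, which is what lets statements about numbers talk about statements and proofs.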

Gödel famously constructed formal statements that talk about the computation of other formal statements—especially self-referential statements which imply that they are not decidable, given a computational theorem prover that systematically enumerates all possible theorems from an enumerable set of axioms. Thus he identified fundamental limits of algorithmic theorem proving, computing, and any type of computation-based AI[GOD] (some misunderstood his result and thought he showed that humans are superior to AIs[BIB3]). Much of early AI in the 1940s-70s was about theorem proving[ZU48][NS56] and deduction in Gödel style through expert systems and logic programming.

Like most great scientists, Gödel built on earlier work. He combined Georg Cantor's diagonalization trick[CAN] (which showed in 1891 that there are different types of infinities) with the foundational work by Gottlob Frege[FRE] (who introduced the first formal language in 1879), Thoralf Skolem[SKO23] (who introduced primitive recursive functions in 1923) and Jacques Herbrand[GOD86] (who identified limitations of Skolem's approach). These authors in turn built on the formal Algebra of Thought (1686) by Gottfried Wilhelm Leibniz,[L86][WI48] which is deductively equivalent[LE18] to the later Boolean Algebra of 1847.[BOO] Leibniz, one of the candidates for the title of "father of computer science,"[LEI21,21a] has been called "the world's first computer scientist"[LA14] and even "the smartest man who ever lived."[SMO13] He described the principles of binary computers governed by punch cards (1679).[L79][LA14][HO66][L03][IN08][SH51][LEI21,21a] In 1673, he designed the first physical hardware (the step reckoner) that could perform all four arithmetic operations, and the first with a memory,[BL16] going beyond the first automatic gear-based data-processing calculators by Wilhelm Schickard (1623) and Blaise Pascal (1642). Leibniz was not only the first to publish infinitesimal calculus,[L84] but also pursued an ambitious project to answer all possible questions through computation. His ideas on a universal language and a general calculus for reasoning were extremely influential (Characteristica Universalis & Calculus Ratiocinator,[WI48] inspired by the 13th century scholar Ramon Llull[LL7]). Leibniz' "Calculemus!" is one of the defining quotes of the age of enlightenment: "If controversies were to arise, there would be no more need of disputation between two philosophers than between two accountants. 
For it would suffice to take their pencils in their hands, to sit down with their slates and say to each other [...]: Let us calculate!"[RU58] In 1931, however, Gödel showed that there are fundamental limitations to what is decidable or computable in this way.[GOD][MIR](Sec. 18)

In 1935, Alonzo Church derived a corollary / extension of Gödel's result by showing that Hilbert & Ackermann's famous Entscheidungsproblem (decision problem) does not have a general solution.[CHU] To do this, he used his alternative universal coding language called Untyped Lambda Calculus, which forms the basis of the highly influential programming language LISP.
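To give a flavor of how the untyped lambda calculus can serve as a universal coding language, here is a small sketch of Church numerals written with Python lambdas. It is an illustration under simplifying assumptions, not a reconstruction of Church's 1935 proof: Python evaluates eagerly, and the helper `to_int` (a hypothetical name) steps outside the pure calculus just to display results.

```python
# A Church numeral n is a function that applies f to x exactly n times.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))

# Arithmetic is expressed purely through function application.
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))


def to_int(n):
    """Convert a Church numeral to a Python int (for display only)."""
    return n(lambda k: k + 1)(0)


two = succ(succ(zero))
three = succ(two)

print(to_int(add(two)(three)))  # 5
print(to_int(mul(two)(three)))  # 6
```

Numbers, and operations on them, are represented by nothing but functions. This is the sense in which the calculus needs no built-in data types to express computation.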

In 1936, Alan Turing introduced yet another universal model which has become perhaps the most well-known of them all (at least in computer science): the Turing Machine.[TUR] He rederived the above-mentioned result.[T20](Sec. IV) Of course, he cited both Gödel and Church in his 1936 paper[TUR] (whose corrections appeared in 1937). In the same year of 1936, Emil Post published yet another independent universal model of computing,[POS] also citing Gödel and Church. Today we know many such models. Nevertheless, according to Wang,[WA74-96] it was Turing's work (1936) that convinced Gödel of the universality of both his own approach (1931-34) and Church's (1935).
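For readers who have never seen one, a Turing Machine can be simulated in a few lines. The sketch below follows a common modern textbook formulation rather than the exact notation of the 1936 paper. The rule table `INCREMENT`, the function `run_turing_machine`, and the conventions used (blank symbol `_`, moves `L`, `R`, `N`) are assumptions made for this example. The machine adds 1 to a binary number, starting with the head on the rightmost bit.

```python
from collections import defaultdict

MOVES = {"L": -1, "R": 1, "N": 0}

# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "N", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "N", "halt"),   # ran off the left end: new digit
}


def run_turing_machine(rules, tape, state, head,
                       blank="_", halt="halt", max_steps=10_000):
    """Run the rule table until the halt state and return the tape contents."""
    cells = defaultdict(lambda: blank, enumerate(tape))
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += MOVES[move]
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(blank)


print(run_turing_machine(INCREMENT, "1011", "carry", head=3))  # 1100
print(run_turing_machine(INCREMENT, "111", "carry", head=2))   # 1000
```

Each step reads one cell, writes one cell, and moves the head by at most one position, so every elementary instruction has constant cost. The `max_steps` guard is a practical concession of the simulator: in general there is no way to decide in advance whether a given machine will halt.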

What exactly did Post[POS] and Turing[TUR] do in 1936 that hadn't been done earlier by Gödel[GOD][GOD34] (1931-34) and Church[CHU] (1935)? There is a seemingly minor difference whose significance emerged only later. Many of Gödel's instruction sequences were series of multiplications of number-coded storage contents by integers. Gödel did not care that the computational complexity of such multiplications tends to increase with storage size. Similarly, Church also ignored the spatio-temporal complexity of the basic instructions in his algorithms. Turing and Post, however, adopted a traditional, reductionist, minimalist, binary view of computing—just like Konrad Zuse (1936).[ZU36] Their machine models permitted only very simple elementary instructions with constant complexity, like the early binary machine model of Leibniz (1679).[L79][LA14][HO66]

They did not exploit this back then—for example, in 1936, Turing used his (quite inefficient) model only to rephrase the results of Gödel and Church on the limits of computability. Later, however, the simplicity of these machines made them a convenient tool for theoretical studies of complexity. (I also happily used and generalized them for the case of never-ending computations.[ALL2])

The Gödel Prize for theoretical computer science is named after Gödel. The currently more lucrative ACM A. M. Turing Award was created in 1966 for contributions "of lasting and major technical importance to the computer field." It is funny—and at the same time embarrassing—that Gödel (1906-1978) never got one, although he not only laid the foundations of the "modern" version of the field, but also identified its most famous open problem "P=NP?" in his famous letter to John von Neumann (1956).[GOD56][URQ10]

The formal models of Gödel (1931-34), Church (1935), Turing (1936), and Post (1936) were theoretical pen & paper constructs that cannot directly serve as a foundation for practical computers. Remarkably, Konrad Zuse's patent application[ZU36-38][Z36][RO98] for the first practical general-purpose program-controlled computer also dates back to 1936. It describes general digital circuits (and predates Claude Shannon's 1937 thesis on digital circuit design[SHA37]). Then, in 1941, Zuse completed Z3, the world's first practical, working, programmable computer (based on the 1936 application). Ignoring the inevitable storage limitations of any physical computer, the physical hardware of Z3 was indeed universal in the "modern" sense of Gödel, Church, Turing, and Post—simple arithmetic tricks can compensate for Z3's lack of an explicit conditional jump instruction.[RO98] Zuse also created the first high-level programming language (Plankalkül)[BAU][KNU] in the early 1940s. He applied it to chess in 1945[KNU] and to theorem proving in 1948.[ZU48]
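The general idea behind such arithmetic tricks can be illustrated as follows. This is a loose sketch and not the specific construction in [RO98]: instead of jumping to one of two branches, the program computes both results and blends them with a 0/1 flag, so that only addition, subtraction, and multiplication are needed. The names `select` and `collatz_step` are hypothetical.

```python
def select(flag: int, if_one: int, if_zero: int) -> int:
    """Return if_one when flag == 1 and if_zero when flag == 0, without branching."""
    return flag * if_one + (1 - flag) * if_zero


def collatz_step(n: int) -> int:
    """Compute 3n + 1 for odd n and n // 2 for even n, with no conditional jump."""
    is_odd = n % 2  # 0 or 1, obtained by arithmetic alone
    return select(is_odd, 3 * n + 1, n // 2)


print(collatz_step(6))  # 3
print(collatz_step(7))  # 22
```

The price is that both branches are always evaluated, which wastes work but does not reduce what can be computed in principle.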

It should be mentioned that practical AI is much older than Gödel's theoretical analysis of the fundamental limitations of AI. In 1914, the Spaniard Leonardo Torres y Quevedo became the 20th century's first pioneer of practical AI when he built the first working chess endgame player (back then, chess was considered an activity restricted to the realm of intelligent creatures). The machine was still considered impressive decades later when the AI pioneer Norbert Wiener played against it at the 1951 Paris conference,[AI51][BRO21][BRU1-4] which is now often viewed as the first conference on AI, although the expression "AI" was coined only later, in 1956, by John McCarthy at another conference in Dartmouth. In fact, in 1951, much of what's now called AI was still called Cybernetics, with a focus very much in line with modern AI based on deep neural networks.[DL1-2][DEC]

Likewise, it should be mentioned that practical computer science is much older than Gödel's foundations of theoretical computer science (compare the comments on Leibniz above). Perhaps the world's first practical programmable machine was an automatic theatre made in the 1st century[SHA7a][RAU1] by Heron of Alexandria (who apparently also had the first known working steam engine—the Aeolipile). The energy source of his programmable automaton was a falling weight pulling a string wrapped around pins of a revolving cylinder. Complex instruction sequences controlling doors and puppets for several minutes were encoded by complex wrappings. The 9th century music automaton by the Banu Musa brothers in Baghdad was perhaps the first machine with a stored program.[BAN][KOE1] It used pins on a revolving cylinder to store programs controlling a steam-driven flute—compare Al-Jazari's programmable drum machine of 1206.[SHA7b] The first commercial program-controlled machines (punch card-based looms) were built in France around 1800 by Joseph-Marie Jacquard and others—perhaps the first "modern" programmers who wrote the world's first industrial software. They inspired Ada Lovelace and her mentor Charles Babbage (UK, circa 1840) who planned but was unable to build a non-binary, decimal, programmable, general purpose computer. The first general working programmable machine built by someone other than Zuse (1941)[RO98] was Howard Aiken's decimal MARK I (US, 1944).

Gödel has often been called the greatest logician since Aristotle.[GOD10] At the end of the previous millennium, TIME magazine ranked him as the most influential mathematician of the 20th century, although some mathematicians say his most important result was about logic and computing, not math. Others call it the fundamental result of theoretical computer science, a discipline that did not yet officially exist back then but effectively came about through Gödel's efforts. The Pulitzer Prize-winning popular book "Gödel, Escher, Bach"[H79] helped to inspire generations of young people to study computer science.

In 2021, we are not only celebrating the 90th anniversary of Gödel's famous 1931 paper but also the 80th anniversary of the world's first functional general-purpose program-controlled computer by Zuse (1941). It seems incredible that within less than a century something that once lived only in the minds of titans has become so indispensable to modern society. The world owes these scientists a great debt. Ten years to go until the Gödel centennial in 2031, and twenty years until the Zuse centennial in 2041! Enough time to plan appropriate celebrations.

Acknowledgments

Thanks to Moshe Vardi, Herbert Bruderer, Jack Copeland, Wolfgang Bibel, Teun Koetsier, Scott Aaronson, Dylan Ashley, Sebastian Oberhoff, Kai Hormann, and several other expert reviewers for useful comments. Since science is about self-correction, let me know under juergen@idsia.ch if you can spot any remaining error. The contents of this article may be used for educational and non-commercial purposes, including articles for Wikipedia and similar sites. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

References

[GOD] K. Gödel. Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I. Monatshefte für Mathematik und Physik, 38:173-198, 1931.

[GOD34] K. Gödel (1934). On undecidable propositions of formal mathematical systems. Notes by S. C. Kleene and J. B. Rosser on lectures at the Institute for Advanced Study, Princeton, New Jersey, 1934, 30 pp. (Reprinted in M. Davis, (ed.), The Undecidable. Basic Papers on Undecidable Propositions, Unsolvable Problems, and Computable Functions, Raven Press, Hewlett, New York, 1965.)

[GOD56] R. J. Lipton and K. W. Regan. Gödel's lost letter and P=NP. Link.

[GOD86] K. Gödel. Collected works Volume I: Publications 1929-36, S. Feferman et al., editors, Oxford Univ. Press, Oxford, 1986.

[GOD10] V. C. Nadkarni. Gödel, Einstein and proof for God. The Economic Times, 2010.

[URQ10] A. Urquhart. Von Neumann, Gödel and complexity theory. Bulletin of Symbolic Logic 16.4 (2010): 516-530. Link.

[BIB3] W. Bibel (2003). Mosaiksteine einer Wissenschaft vom Geiste. Invited talk at the conference on AI and Gödel, Arnoldsheim, 4-6 April 2003. Manuscript, 2003.

[CHU] A. Church (1935). An unsolvable problem of elementary number theory. Bulletin of the American Mathematical Society, 41: 332-333. Abstract of a talk given on 19 April 1935, to the American Mathematical Society. Also in American Journal of Mathematics, 58(2), 345-363 (1 Apr 1936). [First explicit proof that the Entscheidungsproblem (decision problem) does not have a general solution.]

[TUR] A. M. Turing. On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society, Series 2, 41:230-267. Received 28 May 1936. Errata appeared in Series 2, 43, pp 544-546 (1937).

[POS] E. L. Post (1936). Finite Combinatory Processes - Formulation 1. Journal of Symbolic Logic. 1: 103-105. Link.

[WA74] H. Wang (1974). From Mathematics to Philosophy, New York: Humanities Press.

[WA96] H. Wang (1996). A Logical Journey: From Gödel to Philosophy, Cambridge, MA: MIT Press.

[H79] Douglas R. Hofstadter (1979). Gödel, Escher, Bach: An Eternal Golden Braid. Basic Books, ISBN 0-465-02656-7, 1979.

[FRE] G. Frege (1879). Begriffsschrift: eine der arithmetischen nachgebildete Formelsprache des reinen Denkens. Halle an der Saale: Verlag Louis Nebert. [The first formal language / formal proofs—basis of modern logic and programming languages.]

[SKO23] T. Skolem (1923). Begründung der elementaren Arithmetik durch die rekurrierende Denkweise ohne Anwendung scheinbarer Veränderlichen mit unendlichem Ausdehnungsbereich. Skrifter utgit av Videnskapsselskapet i Kristiania, I. Mathematisk-Naturvidenskabelig Klasse 6 (1923), 38 pp.

[CAN] G. Cantor (1891). Ueber eine elementare Frage der Mannigfaltigkeitslehre. Jahresbericht der Deutschen Mathematiker-Vereinigung, 1:75-78. [English translation: W. B. Ewald (ed.). From Immanuel Kant to David Hilbert: A Source Book in the Foundations of Mathematics, Volume 2, pp. 920-922. Oxford University Press, 1996.]

[L79] G. Leibniz. De Progressione dyadica Pars I. 15 March 1679. [Principles of binary computers governed by punch cards.]

[L03] G. Leibniz (1703). In: Explication de l'Arithmetique Binaire / Die Mathematischen Schriften, ed. C. Gerhardt, Berlin 1879, vol.7, p.223. English link. [Leibniz documented the binary arithmetics which allow for greatly simplifying computing hardware and are employed by virtually all modern computers. Binary number encodings per se, however, seem to date back over 4000 years.]

[L84] G. Leibniz (1684). Nova Methodus pro Maximis et Minimis. [First publication on infinitesimal calculus.]

[L86] G. Leibniz (1686). Generales Inquisitiones de analysi notionum et veritatum. Also in Leibniz: Die philosophischen Schriften VII, 1890, pp. 236-247; translated as "A Study in the Calculus of Real Addition" (1690) by G. H. R. Parkinson, Leibniz: Logical Papers—A Selection, Oxford 1966, pp. 131-144.

[LEI21] J. Schmidhuber (2021). 375th birthday of Leibniz, founder of computer science.

[LEI21a] J. Schmidhuber (2021). Der erste Informatiker. Wie Gottfried Wilhelm Leibniz den Computer erdachte. (The first computer scientist. How Gottfried Wilhelm Leibniz conceived the computer.) Frankfurter Allgemeine Zeitung (FAZ), 17/5/2021. FAZ online: 19/5/2021.

[BOO] George Boole (1847). The Mathematical Analysis of Logic, Being an Essay towards a Calculus of Deductive Reasoning. London, England: Macmillan, Barclay, & Macmillan, 1847.

[LL7] A. Bonner (2007). The art and logic of Ramon Llull. Brill Academic Pub, p. 290, 2007.

[RU58] B. Russell (1958). The Philosophy of Leibniz. London: George Allen and Unwin, 1958.

[LE18] W. Lenzen. Leibniz and the Calculus Ratiocinator. Technology and Mathematics, pp 47-78, Springer, 2018.

[LA14] D. R. Lande (2014). Development of the Binary Number System and the Foundations of Computer Science. The Mathematics Enthusiast, vol. 11(3):6 12, 2014. Link.

[BL16] L. Bloch (2016). Informatics in the light of some Leibniz's works. Communication to XB2 Berlin Xenobiology Conference.

[HO66] E. Hochstetter et al. (1966): Herrn von Leibniz' Rechnung mit Null und Eins. Berlin: Siemens AG.

[IN08] R. Ineichen (2008). Leibniz, Caramuel, Harriot und das Dualsystem. Mitteilungen der deutschen Mathematiker-Vereinigung. 16(1):12-15.

[SH51] J. W. Shirley (1951). Binary Numeration before Leibniz. American Journal of Physics 19 (452-454).

[WI48] N. Wiener (1948). Time, communication, and the nervous system. Teleological mechanisms. Annals of the N.Y. Acad. Sci. 50 (4): 197-219. [Quote: "The history of the modern computing machine goes back to Leibniz and Pascal. Indeed, the general idea of a computing machine is nothing but a mechanization of Leibniz's calculus ratiocinator."]

[SMO13] L. Smolin (2013). My hero: Gottfried Wilhelm von Leibniz. The Guardian, 2013. Link. [Quote: "And this is just the one part of Leibniz's enormous legacy: the philosopher Stanley Rosen called him "the smartest person who ever lived"."]

[T20] J. Schmidhuber (2020). Critique of 2018 Turing Award.

[HIN] J. Schmidhuber (2020). Critique of 2019 Honda Prize.

[GOD21] J. Schmidhuber (2021). 90th anniversary celebrations: 1931: Kurt Gödel, founder of theoretical computer science, shows limits of math, logic, computing, and artificial intelligence.

[GOD21a] J. Schmidhuber (2021). Als Kurt Gödel die Grenzen des Berechenbaren entdeckte. (When Kurt Gödel discovered the limits of computability.) Frankfurter Allgemeine Zeitung, 16/6/2021.

[ALL2] J. Schmidhuber (2000). Algorithmic theories of everything. arXiv:quant-ph/0011122. More. See also: Hierarchies of generalized Kolmogorov complexities and nonenumerable universal measures computable in the limit. International Journal of Foundations of Computer Science 13(4):587-612, 2002. PDF. More. See also: The Speed Prior: A New Simplicity Measure Yielding Near-Optimal Computable Predictions. Proc. COLT 2002. PDF. More.

[GM6] J. Schmidhuber (2006). Gödel machines: Fully Self-Referential Optimal Universal Self-Improvers. In B. Goertzel and C. Pennachin, eds.: Artificial General Intelligence, p. 199-226, 2006. PDF. Preprint arXiv:cs/0309048 (2003). See also: Ultimate Cognition à la Gödel. Cognitive Computation 1(2):177-193, 2009. PDF. More.

[DL1] J. Schmidhuber, 2015. Deep Learning in neural networks: An overview. Neural Networks, 61, 85-117. More.

[DL2] J. Schmidhuber, 2015. Deep Learning. Scholarpedia, 10(11):32832.

[MIR] J. Schmidhuber (2019). Deep Learning: Our Miraculous Year 1990-1991. Preprint arXiv:2005.05744, 2020.

[DEC] J. Schmidhuber (2020). The 2010s: Our Decade of Deep Learning / Outlook on the 2020s.

[VAR13] M. Y. Vardi (2013). Who begat computing? Communications of the ACM, Vol. 56(1):5, Jan 2013. Link.

[ZU36] K. Zuse (1936). Verfahren zur selbsttätigen Durchführung von Rechnungen mit Hilfe von Rechenmaschinen. Patent application Z 23 139 / GMD Nr. 005/021, 1936. [First patent application describing a general, practical, program-controlled computer.]

[ZU37] K. Zuse (1937). Einführung in die allgemeine Dyadik. [Mentions the storage of program instructions in the computer's memory.]

[ZU38] K. Zuse (1938). Diary entry of 4 June 1938. [Description of computer architectures that put both program instructions and data into storage—compare the later "von Neumann" architecture.[NEU45]]

[ZU48] K. Zuse (1948). Über den Plankalkül als Mittel zur Formulierung schematisch kombinativer Aufgaben. Archiv der Mathematik 1(6), 441-449 (1948). PDF. [Apparently the first practical design of an automatic theorem prover (based on Zuse's high-level programming language Plankalkül).]

[NS56] A. Newell and H. Simon. The logic theory machine—A complex information processing system. IRE Transactions on Information Theory 2.3 (1956):61-79.

[RO98] R. Rojas (1998). How to make Zuse's Z3 a universal computer. IEEE Annals of Computing, vol. 19:3, 1998.

[BAU] F. L. Bauer, H. Woessner (1972). The "Plankalkül" of Konrad Zuse: A Forerunner of Today's Programming Languages.

[KNU] D. E. Knuth, L. T. Pardo (1976). The Early Development of Programming Languages. Stanford University, Computer Science Department. PDF.

[Z36] S. Faber (2000). Konrad Zuses Bemühungen um die Patentanmeldung der Z3.

[SHA37] C. E. Shannon (1938). A Symbolic Analysis of Relay and Switching Circuits. Trans. AIEE. 57 (12): 713-723. Based on his thesis, MIT, 1937.

[AI51] Les Machines à Calculer et la Pensée Humaine: Paris, 8-13 January 1951, Colloques internationaux du Centre National de la Recherche Scientifique; no. 37, Paris 1953. [H. Bruderer rightly calls that the first conference on AI.]

[BRU1] H. Bruderer. Computing history beyond the UK and US: selected landmarks from continental Europe. Communications of the ACM 60.2 (2017): 76-84.

[BRU2] H. Bruderer. Meilensteine der Rechentechnik. 2 volumes, 3rd edition. Walter de Gruyter GmbH & Co KG, 2020.

[BRU3] H. Bruderer. Milestones in Analog and Digital Computing. 2 volumes, 3rd edition. Springer Nature Switzerland AG, 2020.

[BRU4] H. Bruderer. The Birthplace of Artificial Intelligence? Communications of the ACM, BLOG@CACM, Nov 2017. Link.

[BRO21] D. C. Brock (2021). Cybernetics, Computer Design, and a Meeting of the Minds. An influential 1951 conference in Paris considered the computer as a model of—and for—the human mind. IEEE Spectrum, 2021. Link.

[BAN] Banu Musa brothers (9th century). The book of ingenious devices (Kitab al-hiyal). Translated by D. R. Hill (1979), Springer, p. 44, ISBN 90-277-0833-9. [Perhaps the Banu Musa music automaton was the world's first machine with a stored program.]

[KOE1] T. Koetsier (2001). On the prehistory of programmable machines: musical automata, looms, calculators. Mechanism and Machine Theory, Elsevier, 36 (5): 589-603, 2001.

[RAU1] M. Rausch. Heron von Alexandria. Die Automatentheater und die Erfindung der ersten antiken Programmierung. Diplomica Verlag GmbH, Hamburg 2012. [Perhaps the world's first programmable machine was an automatic theatre made in the 1st century by Heron of Alexandria, who apparently also had the first known working steam engine.]

[SHA7a] N. Sharkey (2007). A programmable robot from AD 60. New Scientist, Sept 2017.

[SHA7b] N. Sharkey (2007). A 13th Century Programmable Robot. Univ. of Sheffield, 2007. [On a programmable drum machine of 1206 by Al-Jazari.]

[LIL1] US Patent 1745175 by Austrian physicist Julius Edgar Lilienfeld for work done in Leipzig: "Method and apparatus for controlling electric current." First filed in Canada on 22.10.1925. [The patent describes a field-effect transistor. Today, almost all transistors are field-effect transistors.]

[LIL2] US Patent 1900018 by Austrian physicist Julius Edgar Lilienfeld: "Device for controlling electric current." Filed on 28.03.1928. [The patent describes a thin film field-effect transistor.]
